Commentary on Michael A. Arbib

Word Counts:

Abstract: 58 words

Main Text: 1011 words

References: 211 words

Total Text: 1329 words

Eytan Ruppin

Abstract: This commentary validates the fundamental evolutionary link between the emergence of imitation and the mirror system. We present a novel computational framework for studying the evolutionary origins of imitative behavior and for examining the underlying mechanisms that emerge. Evolutionary adaptive agents that evolved in this framework exhibit neural "mirror" mechanisms analogous to those found in biological systems.

Uncovering the evolutionary origins of neural mechanisms is bound to be difficult; fossil records and even genomic data can provide very little help. Hence, the author of the target article should be commended for laying out a comprehensive and thorough theory of the evolution of imitation and language. In particular, considering the first stages in the evolution of language, Arbib argues that the mirror system initially evolved to provide visual feedback on one's own actions, also bestowing the ability to understand the actions of others (stage S2), and that further evolution was required for this system to support the copying of actions and eventually imitation (stages S3 and S4). However, the functional link between the mirror system and the capacity to imitate, although compelling, has not yet been clearly demonstrated. We wish to demonstrate that the existence of a mirror system, capable of matching one's own actions to the observed actions of others, is fundamentally linked to imitative behavior and that, in fact, the evolution of imitation promotes the emergence of neural mirroring.

Neurally driven evolutionary adaptive agents (Ruppin, 2002) form an appealing and intuitive approach for studying, and obtaining insights into, the evolutionary origins of the mirror system. These agents, each controlled by an artificial neural-network "brain", inhabit an artificial environment and are evaluated according to their success in performing a certain task. The agents' neurocontrollers evolve via genetic algorithms that encapsulate some of the essential characteristics of natural evolution (e.g., inheritance, variation, and selection).
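The three ingredients named above can be illustrated with a minimal generational genetic algorithm over real-valued genomes. This is a generic sketch, not the algorithm of any model cited here: truncation selection, elitism, Gaussian mutation, the omission of crossover, and all parameter values (`pop_size`, `elite_frac`, `mutation_sd`) are assumptions chosen for brevity.

```python
import random

def evolve(fitness, genome_length, pop_size=50, generations=100,
           elite_frac=0.2, mutation_sd=0.1, seed=0):
    """Minimal generational GA over real-valued genomes (illustrative only)."""
    rng = random.Random(seed)
    # Initial population: random genomes with genes drawn from [-1, 1].
    population = [[rng.uniform(-1.0, 1.0) for _ in range(genome_length)]
                  for _ in range(pop_size)]
    n_elite = max(1, int(elite_frac * pop_size))
    for _ in range(generations):
        # Selection: rank by fitness and keep the top genomes as parents.
        parents = sorted(population, key=fitness, reverse=True)[:n_elite]
        # Inheritance + variation: each offspring copies a random parent
        # and adds Gaussian noise to every gene.
        offspring = [[g + rng.gauss(0.0, mutation_sd) for g in rng.choice(parents)]
                     for _ in range(pop_size - n_elite)]
        # Elitism: parents survive unchanged, so the best fitness never drops.
        population = parents + offspring
    return max(population, key=fitness)
```

In an agent model, `fitness` would run a neurocontroller decoded from the genome through a lifetime in the environment and return its task success; here any function from a list of floats to a number will do.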

We have recently presented a novel computational model of this kind for studying the emergence of imitative behavior and the mirror system (Borenstein and Ruppin, 2004). In contrast to previous engineering-based approaches that explicitly incorporate biologically inspired models of imitation (Billard, 2000; Oztop and Arbib, 2002; Marom et al., 2002; Demiris and Hayes, 2002; Demiris and Johnson, 2003), we employ an evolutionary framework and examine the mechanism that evolved to support imitation. Because this mechanism emerged through evolution rather than being engineered, we believe it is likely to share the fundamental principles that drive natural systems.

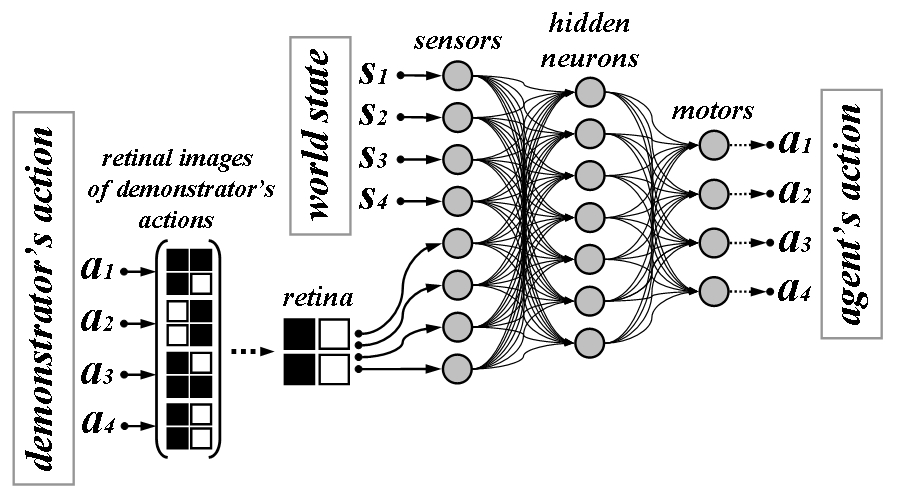

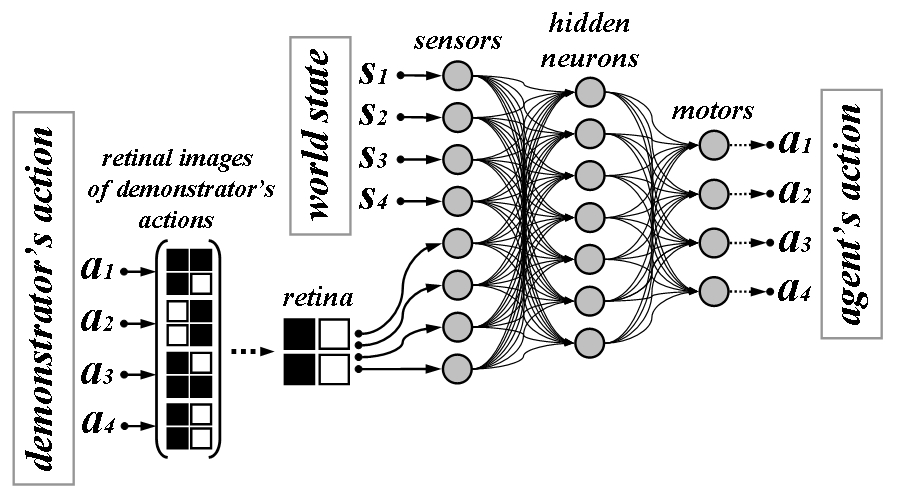

In our model, a population of agents evolves to perform specific actions in response to certain environmental cues. Each agent's controller is an adaptive neural network whose synaptic weights can change over time according to various Hebbian learning rules. The genome of these agents thus encodes not only the initial synaptic weights but also the specific learning rule and learning rate that govern the dynamics of each synapse (Floreano and Urzelai, 2000). Agents are placed in a changing environment that can take one of several "world states", and they should learn to perform the appropriate action in each world state. However, the mapping between world states and appropriate actions is randomly selected anew at the beginning of each agent's life, which prevents successful behavior from becoming genetically determined. Agents can infer the appropriate state-action mapping only from occasional retinal sensory input of a demonstrator that performs the appropriate action in each world state (Figure 1). This setting promotes the emergence of an imitation-based learning strategy, although no such strategy is explicitly introduced into the model.
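As an illustration of genetically specified synaptic plasticity, the sketch below implements four Hebbian rule variants (plain Hebb, postsynaptic, presynaptic, covariance) in the style described by Floreano and Urzelai (2000). It assumes that activations and weights lie in [0, 1] and that each synapse's gene is a triple of initial weight, rule index, and learning rate; the names `SynapseGene`, `RULES`, and `update_weight` are hypothetical, and this is not necessarily the exact rule set or encoding of our model.

```python
import math
from dataclasses import dataclass

@dataclass
class SynapseGene:
    w0: float      # initial weight in [0, 1] (hypothetical encoding)
    rule_id: int   # index into RULES
    eta: float     # learning rate, assumed in [0, 1]

def plain_hebb(w, x, y):
    # Strengthens the synapse when pre- (x) and postsynaptic (y) units are co-active.
    return (1.0 - w) * x * y

def postsynaptic(w, x, y):
    # Like plain Hebb, but also depresses when y is active and x is not.
    return w * (-1.0 + x) * y + (1.0 - w) * x * y

def presynaptic(w, x, y):
    # Like plain Hebb, but also depresses when x is active and y is not.
    return w * x * (-1.0 + y) + (1.0 - w) * x * y

def covariance(w, x, y):
    # Potentiates when x and y are similar, depresses when they differ.
    f = math.tanh(4.0 * (1.0 - abs(x - y)) - 2.0)
    return (1.0 - w) * f if f > 0 else w * f

RULES = {0: plain_hebb, 1: postsynaptic, 2: presynaptic, 3: covariance}

def update_weight(w, x, y, gene):
    """One plasticity step for a synapse whose rule and rate come from its gene."""
    dw = RULES[gene.rule_id](w, x, y)
    # With x, y, w in [0, 1] and eta <= 1 the rules keep w within [0, 1];
    # the clamp only guards against out-of-range inputs.
    return min(1.0, max(0.0, w + gene.eta * dw))
```

The bounded forms (scaling potentiation by `1 - w` and depression by `w`) let weights saturate softly instead of growing without limit, which is why no separate normalization step is used here.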

Figure 1: The agent's sensorimotor system and neurocontroller. The sensory input is binary and includes the current world state and a retinal "image" of the demonstrator's action (when visible). The retinal image for each possible action of the demonstrator and an example retinal input are illustrated. The motor output determines which actions are executed by the agent. The network's synapses are adaptive, and their connection strengths may change during the agent's lifetime according to the genetically specified learning rules.
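To make the input scheme concrete, the sketch below redraws the state-action mapping at the start of each lifetime and builds the binary input vector from a one-hot world state plus a retinal image that is present only when the demonstrator is visible. Every specific here is hypothetical: four world states, a bijective mapping (a random permutation), the 4-bit patterns in `RETINA` (the real retinal images are those in Figure 1), and the demonstration probability `p_demo`.

```python
import random

N_STATES = 4    # hypothetical number of world states
N_ACTIONS = 4   # four actions, as in the repertoire of Figure 2

# Hypothetical binary retinal patterns, one per demonstrator action.
RETINA = {0: (1, 1, 0, 0), 1: (0, 0, 1, 1), 2: (1, 0, 1, 0), 3: (0, 1, 0, 1)}
RETINA_SIZE = 4

def new_lifetime_mapping(rng):
    """Draw a fresh random state -> action mapping (assumed to be a permutation)."""
    actions = list(range(N_ACTIONS))
    rng.shuffle(actions)
    return dict(enumerate(actions))

def sensory_input(state, mapping, demonstrator_visible):
    """Binary input: one-hot world state followed by the retinal image."""
    state_bits = [1 if s == state else 0 for s in range(N_STATES)]
    if demonstrator_visible:
        # The demonstrator performs the correct action for this state.
        retina = list(RETINA[mapping[state]])
    else:
        retina = [0] * RETINA_SIZE
    return state_bits + retina

def lifetime_inputs(rng, steps, p_demo=0.2):
    """Yield (state, correct_action, input_vector) for one agent lifetime."""
    mapping = new_lifetime_mapping(rng)
    for _ in range(steps):
        state = rng.randrange(N_STATES)
        visible = rng.random() < p_demo  # demonstrator only occasionally seen
        yield state, mapping[state], sensory_input(state, mapping, visible)
```

Because `new_lifetime_mapping` is called once per lifetime, a genome cannot hard-wire the correct actions; the only within-life source of that information is the retinal part of the input.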

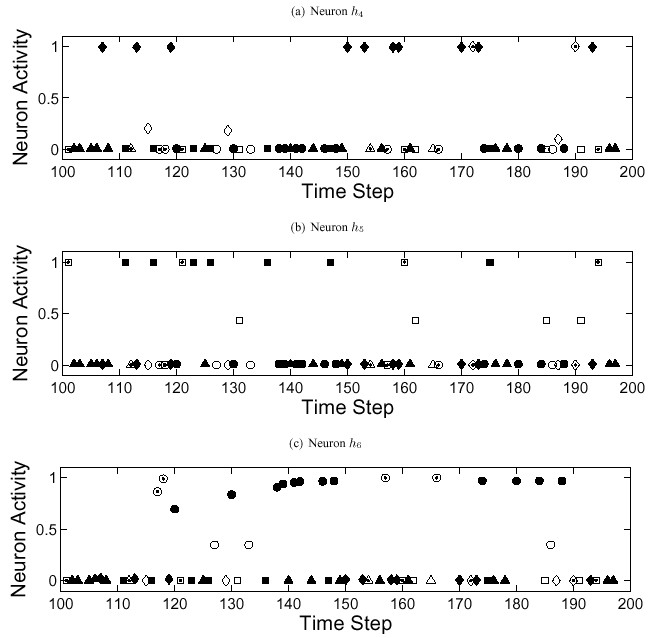

Applying this model, we successfully evolved adaptive agents capable of learning by imitation. After only a few demonstrations, the agents master the behavioral task, regularly executing the proper action in each world state. Moreover, examining the dynamics of the neural mechanisms that emerged, we found that many of these agents embody a neural mirroring device analogous to that found in biological systems. That is, certain neurons in the network's hidden layer are each associated with a specific action and discharge only when this action is either executed by the agent or observed (Figure 2). Further analysis of these networks reveals complex dynamics that combine pre-wired perceptual-motor coupling with learned state-action associations to accomplish the required task.

Figure 2: The activation levels of three hidden neurons in a specific successful agent during time steps 100-200. Circles, squares, diamonds, and triangles represent the four possible actions in the repertoire. An empty shape indicates that the action was only observed but not executed, a filled shape indicates that the action was executed by the agent (stimulated by a visible world state) but not observed, and a dotted shape indicates time steps in which the action was both observed and executed. Evidently, each of these neurons is associated with one specific action and discharges whenever this action is observed or executed.
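The mirror property described above can be operationalized in several ways. One simple sketch, which is not necessarily the analysis we applied, flags a hidden neuron as mirroring action a when its mean activation is high both when a is only observed and when a is only executed, and low when a is neither observed nor executed. The log format (per-step activations plus observed and executed action, or `None`) and the thresholds `on` and `off` are assumptions.

```python
from statistics import mean

def classify_mirror_neurons(records, n_neurons, n_actions, on=0.5, off=0.2):
    """Return {neuron_index: action} for neurons with a mirror-like profile.

    records: list of (activations, observed_action, executed_action), where
    the two actions are ints or None (hypothetical log format).
    """
    result = {}
    for n in range(n_neurons):
        for a in range(n_actions):
            obs_only = [act[n] for act, o, e in records if o == a and e != a]
            exe_only = [act[n] for act, o, e in records if e == a and o != a]
            neither = [act[n] for act, o, e in records if o != a and e != a]
            if not (obs_only and exe_only and neither):
                continue  # not enough evidence to judge this neuron/action pair
            # Mirror-like: active for a when seen or done, quiet otherwise.
            if mean(obs_only) > on and mean(exe_only) > on and mean(neither) < off:
                result[n] = a
                break
    return result
```

Requiring separate "observed only" and "executed only" conditions is what distinguishes a mirror-like unit from a purely perceptual or purely motor one; a unit that is always active fails the `neither` test.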

This framework provides a fully accessible, yet biologically plausible, distilled model of imitation and can serve as a vehicle for studying the mechanisms that underlie imitation in biological systems. In particular, this simple model demonstrates the crucial role of the mirror system in imitative behavior: in our model, the emergence of mirror neurons is driven solely by the need to imitate observed actions. These findings validate the strong link between the capacity to imitate and the ability to match observed and executed actions, and thus support Arbib's claim of a functional link between the mirror system and imitation. However, whereas Arbib hypothesizes that the evolution of the mirror system preceded the evolution of imitation, this model suggests a possible alternative evolutionary route, grounding the emergence of mirror neurons in the evolution of imitative behavior. Evidently, at least in this simple evolutionary framework, neural mirroring can co-evolve in parallel with imitation. We believe that evolutionary adaptive agent models, such as the one described above, form a promising test-bed for studying the evolution of various neural mechanisms that underlie complex cognitive behaviors. Further research on artificially evolving systems can shed new light on some of the key issues concerning the evolution of perception, imitation, and language.

References

Billard, A. (2000). Learning motor skills by imitation: a biologically inspired robotic model. Cybernetics & Systems, 32:155-193.

Borenstein, E. and Ruppin, E. (2004). Evolving imitating agents and the emergence of a neural mirror system. In Artificial Life IX: Proceedings of the Ninth International Conference on the Simulation and Synthesis of Living Systems. MIT Press.

Demiris, Y. and Hayes, G. (2002). Imitation as a dual-route process featuring predictive and learning components: a biologically plausible computational model. In Dautenhahn, K. and Nehaniv, C., editors, Imitation in Animals and Artifacts. MIT Press.

Demiris, Y. and Johnson, M. (2003). Distributed, predictive perception of actions: a biologically inspired robotics architecture for imitation and learning. Connection Science, 15(4):231-243.

Floreano, D. and Urzelai, J. (2000). Evolutionary robots with on-line self-organization and behavioral fitness. Neural Networks, 13:431-443.

Marom, Y., Maistros, G., and Hayes, G. (2002). Toward a mirror system for the development of socially-mediated skills. In Prince, C., Demiris, Y., Marom, Y., Kozima, H., and Balkenius, C., editors, Proceedings Second International Workshop on Epigenetic Robotics: Modeling Cognitive Development in Robotic Systems, volume 94, Edinburgh, Scotland.

Oztop, E. and Arbib, M. (2002). Schema design and implementation of the grasp-related mirror neuron system. Biological Cybernetics, 87:116-140.

Ruppin, E. (2002). Evolutionary autonomous agents: A neuroscience perspective. Nature Reviews Neuroscience, 3(2):132-141.